When I first delved into this ocean, my first exercise was running Alpaca or Llama on my own desktop. Why? When you send prompts to ChatGPT, your words travel into someone else’s ether. With your own LLM running locally, you can have “private” conversations.

Doing the usual search for ‘How to Install Alpaca & Llama on Windows’ brought up a few YouTube videos. Well, it turns out the instructions I chose (from How-To Geek) were already dated. I picked that path because it uses Docker, so if things went south, it would blow up the container, not my machine. There were many things to install, but I hit a wall with SergeAI. Fortunately, there is a lovely Discord group with a channel for Windows support.

Following the new instructions, I was finally (and excitedly) presented with a menu of a whole bunch of LLMs! The recommendation was to start with the smallest LLM (7B), since it has the lowest system requirements (roughly 4.5 GB of RAM). I chose Guanaco. There was also an Alpaca flavor, but it was 65B, much too large for my machine’s specs. All of the LLMs pointed to Hugging Face for downloads. Note to self: check this site out later.
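Why is 7B so much friendlier than 65B? A rough rule of thumb is that the weights alone take about (number of parameters × bits per weight ÷ 8) bytes. The sketch below assumes 4-bit quantized weights, which is common for locally run models but is an assumption here, not something the Serge menu told me. The function name is hypothetical, and the result ignores the extra memory needed for context and runtime overhead, which is presumably why the recommendation for 7B was closer to 4.5 GB.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10**9 bytes).

    Assumption: every parameter is stored at `bits_per_weight` bits
    (e.g. 4 for a 4-bit quantized model). Context buffers and runtime
    overhead are NOT included.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Compare the two model sizes from the Serge menu, assuming 4-bit weights.
    for name, params in [("7B", 7), ("65B", 65)]:
        print(f"{name}: ~{estimate_weight_memory_gb(params, 4):.1f} GB at 4-bit")
```

Under that assumption, 7B lands around 3.5 GB of weights while 65B needs over 30 GB, which is why the bigger Alpaca flavor was out of reach.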

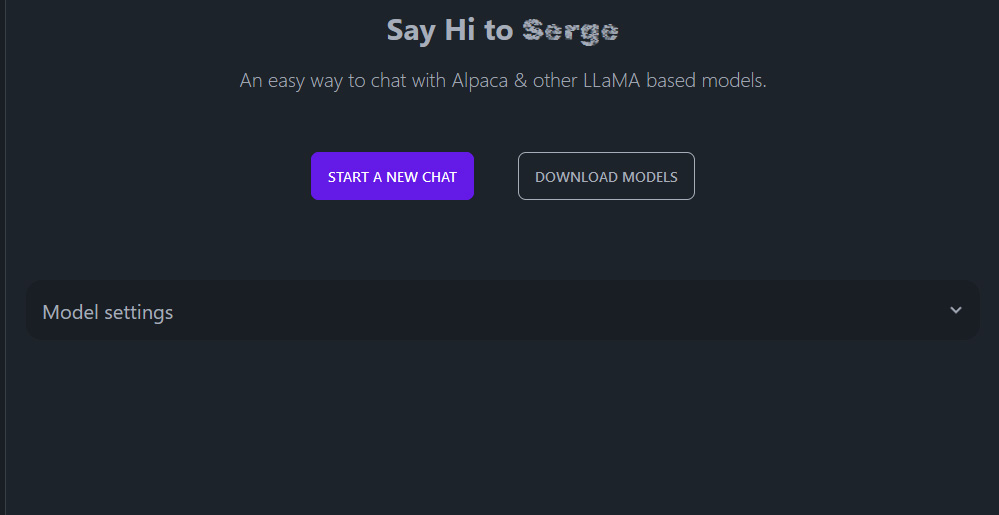

Once Guanaco was set up, I navigated to localhost:8008 and was presented with a browser interface:
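If the page doesn’t load, a quick way to check whether anything is listening on that port is a tiny script like the one below. It is a hypothetical helper of my own, not part of Serge; it only assumes the web UI is served over plain HTTP at http://localhost:8008, as in my setup.

```python
import urllib.error
import urllib.request


def is_serving(url: str = "http://localhost:8008", timeout: float = 3.0) -> bool:
    """Return True if some HTTP server answers at `url`, else False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An HTTP error status (404, 500, ...) still means a server replied.
        return True
    except OSError:
        # Connection refused, timeouts, DNS failures (URLError is an OSError).
        return False


if __name__ == "__main__":
    print("UI reachable:", is_serving())
```

If this prints `False`, the container probably isn’t running or the port isn’t mapped.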

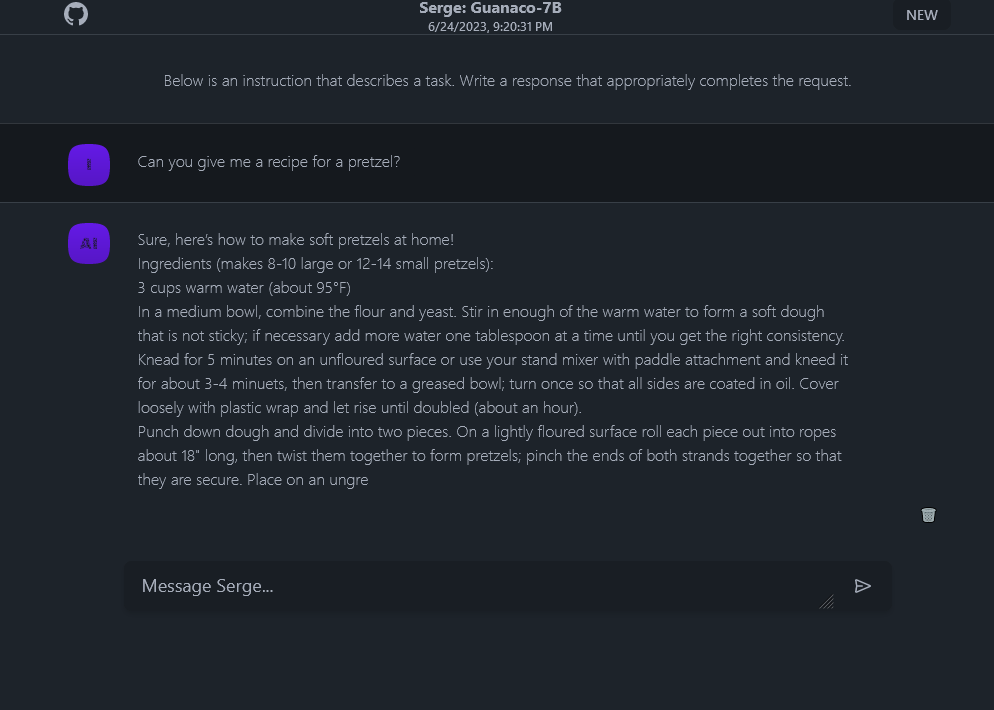

Voila! My first chat with Guanaco was silly:

You can see there is a foundation prompt at the very top: “Below is an instruction that describes a task. Write a response that appropriately completes the request.” You can change this “pre-prompt” in the settings. Also notice that the response is truncated, presumably because of the default output length: in Model Settings, the maximum generated text length is set to 256 tokens. You can raise this to 512.
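To make the two settings concrete, here is a minimal sketch of what they do. It assumes an Alpaca-style template (the pre-prompt, then `### Instruction:` and `### Response:` sections); Serge’s exact formatting may differ. The cut-off is also simplified: the hypothetical `truncate_to_max_tokens` splits on whitespace, whereas real models count subword tokens, so a 256-token limit usually yields fewer than 256 words.

```python
DEFAULT_PRE_PROMPT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def build_prompt(instruction: str, pre_prompt: str = DEFAULT_PRE_PROMPT) -> str:
    """Place the pre-prompt above the user's instruction (Alpaca-style template)."""
    return f"{pre_prompt}\n\n### Instruction:\n{instruction}\n\n### Response:\n"


def truncate_to_max_tokens(text: str, max_tokens: int = 256) -> str:
    """Crude stand-in for a generation cap: keep the first `max_tokens` words.

    Real models stop after `max_tokens` subword tokens, not words.
    """
    words = text.split()
    return " ".join(words[:max_tokens])


if __name__ == "__main__":
    print(build_prompt("Tell me a joke about llamas."))
    long_reply = " ".join(f"word{i}" for i in range(300))
    print(len(truncate_to_max_tokens(long_reply).split()))  # 256
```

Changing the pre-prompt in the settings swaps out the first paragraph of every prompt, and raising the limit to 512 simply lets the model keep generating longer before it is cut off.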

Regardless of its limitations, I’m thrilled to have my own LLM!